Authored by Naveen Athrappully via The Epoch Times (emphasis ours),

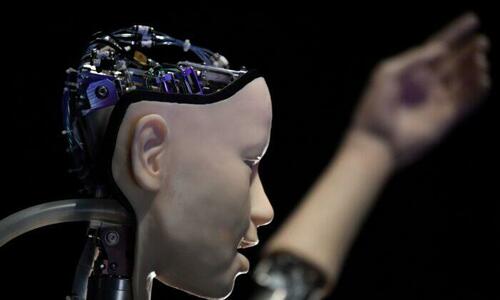

Human beings are not ready for a powerful AI under present conditions or even in the “foreseeable future,” stated a foremost expert in the field, adding that the recent open letter calling for a six-month moratorium on developing advanced artificial intelligence is “understating the seriousness of the situation.”

“The key issue is not ‘human-competitive’ intelligence (as the open letter puts it); it’s what happens after AI gets to smarter-than-human intelligence,” said Eliezer Yudkowsky, a decision theorist and leading AI researcher, in a March 29 Time magazine op-ed. “Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.

“Not as in ‘maybe possibly some remote chance,’ but as in ‘that is the obvious thing that would happen.’ It’s not that you can’t, in principle, survive creating something much smarter than you; it’s that it would require precision and preparation and new scientific insights, and probably not having AI systems composed of giant inscrutable arrays of fractional numbers.”

After the recent popularity and explosive growth of ChatGPT, business leaders and researchers, now totaling 1,843 and including Elon Musk and Steve Wozniak, signed a letter calling on “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.” GPT-4, released in March, is the latest version of the language model behind OpenAI’s chatbot, ChatGPT.

AI ‘Does Not Care’ and Will Demand Rights

Yudkowsky predicts that in the absence of meticulous preparation, the AI will have vastly different demands from humans, and once self-aware will “not care for us” nor any other sentient life. “That kind of caring is something that could in principle be imbued into an AI but we are not ready and do not currently know how.” This is why he is calling for an absolute shutdown.

Without a human approach to life, the AI will simply consider all sentient beings to be “made of atoms it can use for something else.” And there is little humanity can do to stop it. Yudkowsky compared the scenario to “a 10-year-old trying to play chess against Stockfish 15.” No human chess player has yet beaten Stockfish, a feat that is considered impossible.

The industry veteran asked readers to imagine AI technology that is not confined to the internet.

“Visualize an entire alien civilization, thinking at millions of times human speeds, initially confined to computers—in a world of creatures that are, from its perspective, very stupid and very slow.”

The AI will expand its influence beyond physical networks and could “build artificial life forms” using laboratories where proteins are produced from DNA strings.

The end result of building an all-powerful AI, under present conditions, would be the death of “every single member of the human species and all biological life on Earth,” he warned.

