Authored by Elijah Cohen via TheMindUnleashed.com,

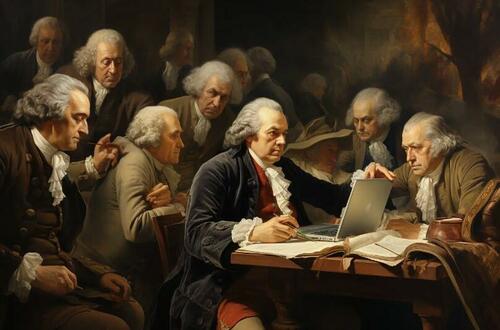

AI writing detectors are flagging the U.S. Constitution, one of America’s most significant legal documents, as a piece of AI-generated text. This intriguing controversy raises many questions, most importantly: How could a document written centuries before the advent of AI be mistaken for AI-generated text?

AI writing detectors, such as GPTZero, are designed to identify text produced by AI models like ChatGPT. When these detectors analyze the U.S. Constitution, they often conclude that it was likely written by an AI.

This is, of course, impossible unless James Madison, one of the key drafters of the Constitution, had access to time travel and ChatGPT. So, what is the reason behind these false positives?

The use of AI writing tools, particularly generative AI like ChatGPT, has stirred up a whirlwind in the educational sector. There’s a growing concern that these tools could disrupt traditional teaching methods, particularly the use of essays to assess a student’s understanding of a topic. In response, educators are turning to AI writing detectors, such as GPTZero, ZeroGPT, and OpenAI’s Text Classifier, in an attempt to maintain the status quo. However, these tools have proven to be unreliable due to their propensity for false positives.

For instance, when GPTZero is fed a section of the U.S. Constitution, it declares that the text is “likely to be written entirely by AI.” Similar results have been observed with other AI detectors, leading to a wave of confusion and humor about the possibility of the Founding Fathers being robots. Even religious texts, like sections from the Bible, have been flagged as AI-generated.

To comprehend why these tools make such glaring errors, we need to delve into the underlying principles of AI detection. AI writing detectors use AI models that have been trained on a vast body of text, along with a set of rules to determine if the writing is human or AI-generated. For example, GPTZero uses a neural network trained on a diverse corpus of human-written and AI-generated text. It then uses properties like “perplexity” and “burstiness” to evaluate the text.

Perplexity, in the context of machine learning, measures how much a piece of text deviates from what an AI model has learned during its training. The idea is that AI models like ChatGPT, when generating text, will naturally gravitate towards what they know best, which comes from their training data. The closer the output is to the training data, the lower the perplexity rating. However, humans can also write with low perplexity, especially when imitating a formal style used in law or certain types of academic writing.

In other words, just because something sounds generic doesn’t mean it comes from AI, yet that seems to be how these programs are judging things.
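To make the perplexity idea concrete, here is a minimal sketch in Python. It is not how GPTZero or any real detector works internally; it assumes a toy word-frequency model with add-one smoothing, and the names `train_unigram` and `perplexity` are hypothetical helpers invented for illustration. The point is only that text resembling the training data scores a lower perplexity than text the model has never seen, regardless of who wrote it.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build a toy word-probability function (assumption: add-one smoothing)."""
    counts = Counter(corpus_tokens)
    total = len(corpus_tokens)
    vocab_size = len(counts) + 1  # one extra slot reserved for unseen words

    def prob(token):
        # Counter returns 0 for words that never appeared in training.
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(prob, tokens):
    """Exponential of the average negative log-probability per token."""
    log_sum = sum(math.log(prob(t)) for t in tokens)
    return math.exp(-log_sum / len(tokens))


# Toy "training data": a fragment in a formal, widely reproduced style.
training = (
    "we the people of the united states in order to form "
    "a more perfect union"
).split()
prob = train_unigram(training)

familiar = "we the people of the united states".split()
unfamiliar = "purple giraffes juggle seventeen kazoos".split()

print("familiar text perplexity:  ", perplexity(prob, familiar))
print("unfamiliar text perplexity:", perplexity(prob, unfamiliar))
```

In this sketch the familiar, human-written phrase gets the lower score simply because it matches the training text. A detector that treats low perplexity as a sign of AI authorship would flag it, which mirrors the false positive described above for the Constitution.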

This phenomenon underscores the limitations of AI writing detectors and the need for a more reliable solution, especially in the educational sector.

Diving deeper into the topic of AI-generated writing, we find that it has gained popularity due to advancements in machine learning models like GPT-4. These models can generate human-like text, making them useful in various fields, from content creation to customer service. However, this has also raised concerns about authenticity and originality, especially in academia, where the use of AI-generated writing could be considered plagiarism.

The rise of AI-generated writing has also led to the development of AI writing detectors. These tools aim to distinguish between human and AI-generated text, a task that is becoming increasingly challenging due to the sophistication of modern AI models. However, as we’ve seen with the U.S. Constitution example, these tools often produce false positives.

The development of more accurate AI writing detectors is crucial. These tools need to consider the nuances of human writing, including style, tone, and context. They also need to be continually updated to keep pace with advancements in AI writing technology.

In conclusion, while AI-generated writing presents exciting opportunities, it also poses challenges. The development of reliable AI writing detectors is a critical step towards addressing these challenges and ensuring the integrity of written content in the digital age.
