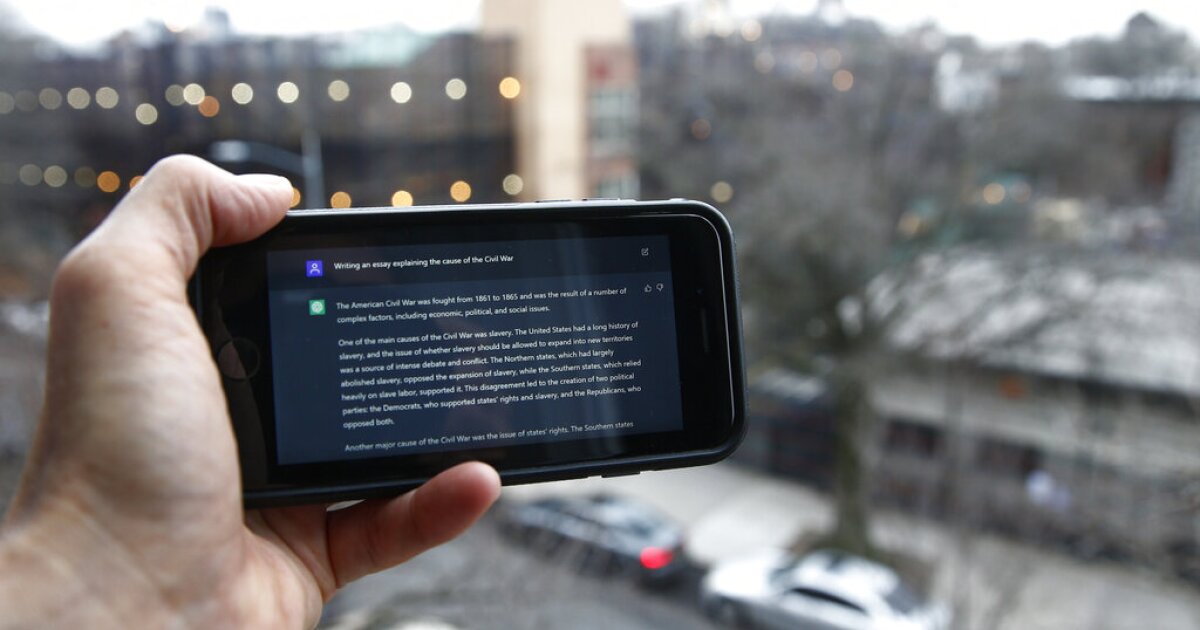

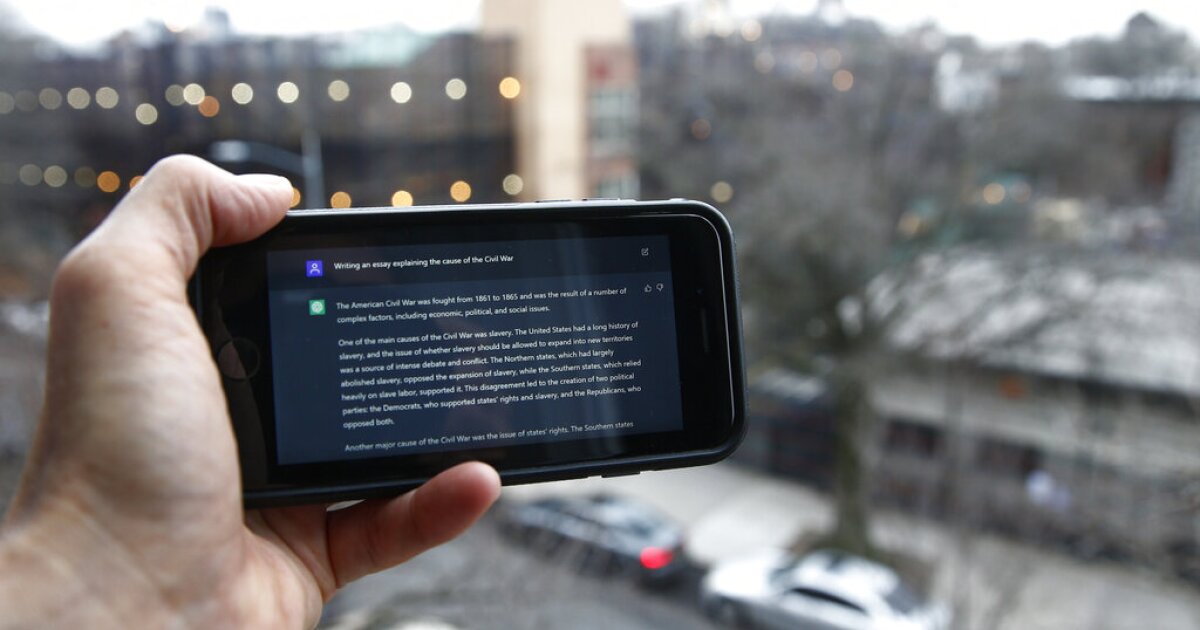

Google is testing tools to help journalists by taking information about current events and turning it into articles. The Big Tech giant is the latest company to try to apply generative artificial intelligence to journalism. Yet the efforts so far have resulted in the publication of high-profile factual errors.

Here are several of the companies whose AI-powered publishing tests became a factual mess.

Gizmodo

G/O Media, the publisher of websites including Gizmodo and Jezebel, published four articles in the last two weeks that were entirely written by ChatGPT and Bard. One of the most notable examples was a chronological history of Star Wars that contained several errors and was not reviewed by an editor.

The articles infuriated the company’s staff, who slammed them publicly. G/O Media’s reliance on AI is “unethical and unacceptable,” the company union tweeted. “If you see a byline ending in ‘Bot,’ don’t click it.”

Company leadership defended the decision, arguing that it was a necessary test. “I think it would be irresponsible to not be testing it,” G/O Media CEO Jim Spanfeller told Vox.

Men’s Journal

The Arena Group, which publishes Sports Illustrated, Parade, and Men’s Journal, announced in February that it would experiment with AI-written content to produce more evergreen material such as health articles. The practice immediately backfired: the magazine’s very first AI-generated article, a piece about low testosterone, contained multiple medical errors.

Editors changed the article after Futurism contacted them for comment, leaving it nearly unrecognizable.

CNET

The technology website CNET began to experiment with producing AI-generated articles on a number of mundane topics, such as insurance policy rates. The website published the articles under the alias of one of its editors, using the Responsible A.I. Machine Partner. It published more than 70 articles written by AI in January.

A review found that more than half of the published articles contained either factual errors or “phrases that were not entirely original,” implying plagiarism by the bot. Red Ventures, CNET’s publisher, temporarily paused the practice, stating it would review its AI publishing policy.

The publisher announced in June that it would not publish entire stories using an AI tool and that it would ensure that reviews and product tests were done by humans. The tech outlet would “explore leveraging” AI for a number of organizational tasks, however, such as sorting data, creating outlines, and analyzing existing text.