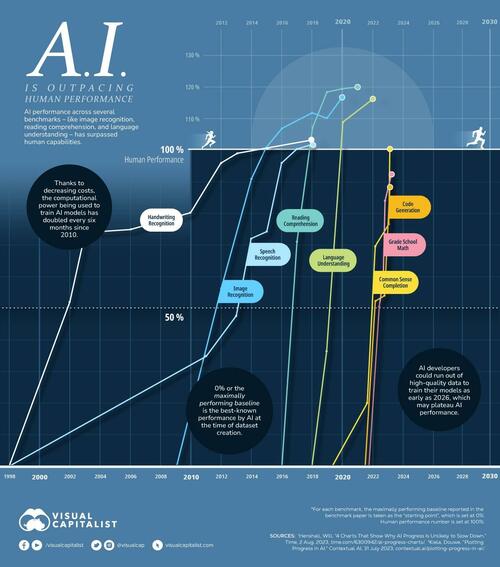

With ChatGPT’s explosive rise, AI has been making its presence felt for the masses, especially in traditional bastions of human capability: reading comprehension, speech recognition, and image identification.

In fact, as Visual Capitalist's Mark Belan and Pallavi Rao show in the chart below, it’s clear that AI has surpassed human performance in quite a few areas, and looks set to overtake humans elsewhere.

How Performance Gets Tested

Using data from Contextual AI, we visualize how quickly AI models have started to beat benchmark datasets, as well as whether or not they’ve yet reached human levels of skill.

Each dataset is devised around a certain skill, like handwriting recognition, language understanding, or reading comprehension, and each percentage score is measured against the following two reference points:

- 0% or “maximally performing baseline”: This is equal to the best-known performance by AI at the time of dataset creation.
- 100%: This mark is equal to human performance on the dataset.

By creating a scale between these two points, the progress of AI models on each dataset can be tracked. Each point on a line signifies a best result, and as the line trends upwards, AI models get closer and closer to matching human performance.
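To make the scale concrete, here is a minimal sketch of how a raw benchmark score could be mapped onto it. It assumes the scale is a simple linear interpolation between the baseline and human performance, which the description above suggests but does not state outright. The function names and all numbers are hypothetical and are not taken from the Contextual AI data.

```python
def normalized_progress(model_score, baseline_score, human_score):
    """Map a raw score onto the 0%-100% scale (assumed to be linear).

    0%   -> best-known AI performance when the dataset was created
    100% -> human performance on the dataset
    """
    if human_score == baseline_score:
        raise ValueError("baseline and human scores must differ")
    return 100.0 * (model_score - baseline_score) / (human_score - baseline_score)


def first_year_matching_human(best_results, baseline_score, human_score):
    """Return the first year whose best result reaches 100%, or None.

    best_results is a list of (year, best_raw_score) pairs.
    """
    for year, score in sorted(best_results):
        if normalized_progress(score, baseline_score, human_score) >= 100.0:
            return year
    return None


# Hypothetical benchmark: baseline accuracy 60, human accuracy 90.
example_results = [(2018, 70.0), (2019, 85.0), (2020, 91.0)]
matching_year = first_year_matching_human(example_results, 60.0, 90.0)
```

With these made-up numbers, a model scoring 75 would sit at 50% on the scale, and the first best result at or above the human mark falls in 2020.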

Below is a table of when AI started matching human performance across all eight skills:

A key observation from the chart is how much progress has been made since 2010. In fact, many of these datasets, such as SQuAD, GLUE, and HellaSwag, didn’t exist before 2015.

In response to benchmarks being rendered obsolete, some of the newer datasets are constantly being updated with new and relevant data points. This is why AI models technically haven’t yet matched human performance in some areas (grade school math and code generation), though they are well on their way.

What’s Led to AI Outperforming Humans?

But what has led to such speedy growth in AI’s abilities in the last few years?

Thanks to revolutions in computing power, data availability, and better algorithms, AI models are faster, have bigger datasets to learn from, and are more efficient than they were even a decade ago.

This is why headlines routinely talk about AI language models matching or beating human performance on standardized tests. In fact, a key problem for AI developers is that their models keep beating the benchmark datasets devised to test them, yet still fail real-world tests.

Since further computing and algorithmic gains are expected in the next few years, this rapid progress is likely to continue. However, the next potential bottleneck to AI’s progress might not be AI itself, but a lack of data for models to train on.
