By Daniel Nuccio of The College Fix

‘Used by governments and Big Tech to shape public opinion by restricting certain viewpoints or promoting others’: report

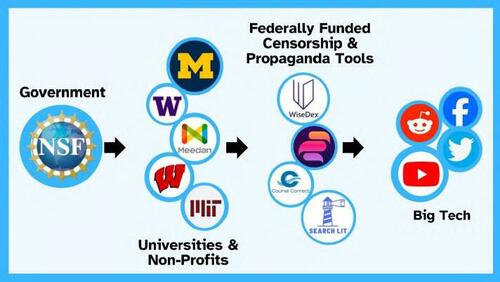

The National Science Foundation is using taxpayer money to pay universities to create AI tools that can be used to censor Americans on various social media platforms, according to members of the House.

The University of Michigan, the University of Wisconsin-Madison, and MIT are among the universities cited in an interim report from the House Judiciary Committee and the Select Subcommittee on the Weaponization of the Federal Government.

The report details the foundation’s “funding of AI-powered censorship and propaganda tools, and its repeated efforts to hide its actions and avoid political and media scrutiny.”

“NSF has been issuing multi-million-dollar grants to university and non-profit research teams” for the purpose of developing AI-powered technologies “that can be used by governments and Big Tech to shape public opinion by restricting certain viewpoints or promoting others,” states the report, released last month.

Funding for the projects began in 2021 and was issued through the NSF’s Convergence Accelerator grant program, which was launched in 2019 to develop interdisciplinary solutions to major challenges of national and societal importance, such as those pertaining to AI and quantum technology, the report states.

In 2021, however, the NSF introduced “Track F: Trust & Authenticity in Communication Systems.”

The NSF’s 2021 Convergence Accelerator program solicitation stated the goal of Track F projects was to “develop prototype(s) of novel research platforms forming integrated collection(s) of tools, techniques, and educational materials and programs to support increased citizen trust in public information of all sorts (health, climate, news, etc.), through more effectively preventing, mitigating, and adapting to critical threats in our communications systems.”

Specifically, the grant solicitation singled out the threats posed by hackers and misinformation.

That September, the select subcommittee report notes, the NSF awarded “twelve Track F teams $750,000 each (a total of $9 million) to develop and refine their project ideas and build partnerships.” The following year, the NSF selected six of the 12 teams to receive an additional $5 million each for their respective projects, according to the report.

Projects from the University of Michigan, University of Wisconsin-Madison, MIT, and Meedan, a nonprofit that specializes in developing software to counter misinformation, are highlighted by the select subcommittee.

Collectively, these four projects received $13 million from the NSF, it states.

“The University of Michigan intended to use the federal funding to develop its tool ‘WiseDex,’ which could use AI technology to assess the veracity of content on social media and assist large social media platforms with what content should be removed or otherwise censored,” it states.

The University of Wisconsin-Madison’s Course Correct, which was featured in an article from The College Fix last year, was “intended to aid reporters, public health organizations, election administration officials, and others to address so-called misinformation on topics such as U.S. elections and COVID-19 vaccine hesitancy.”

MIT’s Search Lit, as described in the select subcommittee’s report, was developed as an intervention to educate groups of Americans whom the researchers believed were most vulnerable to misinformation, such as conservatives, minorities, rural Americans, older adults, and military families.

Meedan, according to its website, used its funding to develop “easy-to-use, mobile-friendly tools [that] will allow AAPI [Asian-American and Pacific Islander] community members to forward potentially harmful content to tiplines and discover relevant context explainers, fact-checks, media literacy materials, and other misinformation interventions.”

According to the select subcommittee’s report, “Once empowered with taxpayer dollars, the pseudo-science researchers wield the resources and prestige bestowed upon them by the federal government against any entities that resist their censorship projects.”

“In some instances,” the report states, “if a social media company fails to act fast enough to change a policy or remove what the researchers perceive to be misinformation on its platform, disinformation researchers will issue blogposts or formal papers to ‘generate a communications moment’ (i.e., negative press coverage) for the platform, seeking to coerce it into compliance with their demands.”

The College Fix emailed senior members of the three university research teams, as well as a representative from Meedan, seeking comment on the portrayal of their work in the select subcommittee’s report.

Paul Resnick, who serves as the WiseDex project director at the University of Michigan, referred The College Fix to the WiseDex website.

“Social media companies have policies against harmful misinformation. Unfortunately, enforcement is uneven, especially for non-English content,” states the site. “WiseDex harnesses the wisdom of crowds and AI techniques to help flag more posts [than humans can]. The result is more comprehensive, equitable, and consistent enforcement, significantly reducing the spread of misinformation.”

A video on the site presents the tool as a means to help social media sites flag posts that violate platform policies and subsequently attach warnings to or remove the posts. Posts portraying approved COVID-19 vaccines as potentially dangerous are used as an example.

Michael Wagner from the University of Wisconsin-Madison also responded to The Fix, writing, “It is interesting to be included in a report that claims to be about censorship when our project censors exactly no one.”

According to the select subcommittee report, however, some of the researchers associated with Track F and similar projects privately acknowledged that efforts to combat misinformation were inherently political and a form of censorship.

Yet after negative coverage depicted Track F projects as politically motivated and their products as government-funded censorship tools, the NSF began discussing media and outreach strategy with grant recipients, the report notes.

Notes from a pair of Track F media strategy planning sessions, included in Appendix B of the select subcommittee’s report, recommended that researchers, when interacting with the media, focus on the “pro-democracy” and “non-ideological” nature of their work, “Give examples of both sides,” and “use sports metaphors.”

The select subcommittee report also notes that there were discussions of creating a media blacklist, although at least one researcher from the University of Michigan objected, citing the potential optics.
