Former U.S. president Donald Trump posing with Black voters, President Joe Biden discouraging people from voting in a phone call or the Pope in a puffy white jacket: Deepfake videos, photos and audio recordings have become widespread on various internet platforms, aided by the technological advances of generative AI tools like Midjourney, Google's Gemini or OpenAI's ChatGPT.

As Statista's Florian Zandt details below, with the right prompts, anyone can create seemingly real images or make the voices of prominent political or economic figures and entertainers say anything they want. While creating a deepfake is not a criminal offense on its own, many governments are nevertheless moving towards stronger regulation of the use of artificial intelligence to prevent harm to the parties involved.

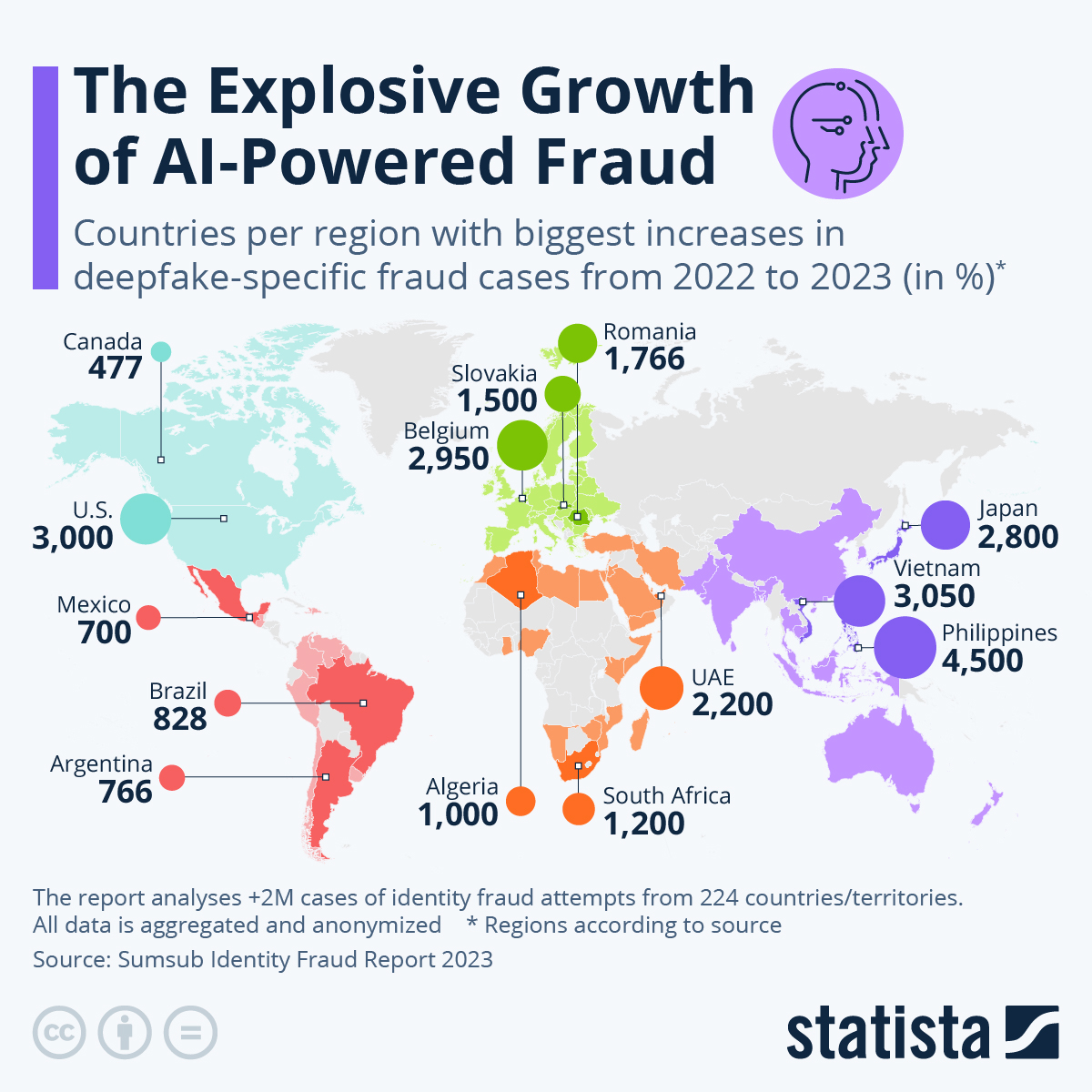

Apart from the main use of deepfakes, the creation of non-consensual pornographic content mostly involving female celebrities, this technology can also be used to commit identity fraud by manufacturing fake IDs or impersonating others over the phone. As Statista's chart based on the most recent annual report of identity verification provider Sumsub shows, deepfake-related identity fraud cases skyrocketed between 2022 and 2023 in many countries around the world.

For example, the number of deepfake fraud attempts in the Philippines rose by 4,500 percent year over year, followed by nations like Vietnam, the United States and Belgium. With the capabilities of so-called artificial intelligence potentially increasing even further, as evidenced by products like the AI video generator Sora, deepfake fraud attempts could also spill over into other areas.

"We’ve seen deepfakes become more and more convincing in recent years and this will only continue and branch out into new types of fraud, as seen with voice deepfakes," says Pavel Goldman-Kalaydin, Sumsub's Head of Artificial Intelligence and Machine Learning, in the aforementioned report.

"Both consumers and companies need to remain hyper-vigilant to synthetic fraud and look to multi-layered anti-fraud solutions, not only deepfake detection."

These assessments are shared by many cybersecurity experts. For example, a survey of 199 cybersecurity leaders attending the World Economic Forum Annual Meeting on Cybersecurity in 2023 showed that 46 percent of respondents were most concerned about the "advance of adversarial capabilities – phishing, malware development, deepfakes" when asked about the risks artificial intelligence poses for cybersecurity in the future.
