Authored by Makai Allbert via The Epoch Times (emphasis ours),

As Mohamed Elmasry, emeritus professor of computer engineering at the University of Waterloo, watched his 11- and 10-year-old grandchildren tapping away on their smartphones, he posed a simple question: “What’s one-third of nine?”

Instead of taking a moment to think, they immediately opened their calculator apps, he writes in his book “iMind Artificial and Real Intelligence.”

Later, fresh from a family vacation in Cuba, he asked them to name the island’s capital. Once again, their fingers flew to their devices, “Googling” the answer rather than recalling their recent experience.

With 60 percent of the global population—and 97 percent of those under 30—using smartphones, technology has inadvertently become an extension of our thinking process.

However, everything comes at a cost. Cognitive outsourcing, which involves relying on external systems to collect or process information, may increase one’s risk of cognitive decline.

Habitual GPS (global positioning system) use, for example, has been linked to a significant decrease in spatial memory, reducing one’s ability to navigate independently. As AI applications such as ChatGPT become a household norm (55 percent of Americans report regular AI use), recent studies have linked their use to impaired critical thinking skills, dependency, loss of decision-making, and laziness.

Experts emphasize cultivating and prioritizing innate human skills that technology cannot replicate.

Neglected Real Intelligence

Referring to his grandkids and their overreliance on technology, Elmasry explains that they are far from “stupid.”

The problem is they are not using their real intelligence.

They, and the rest of their generation, have grown accustomed to using apps and digital devices—unconsciously defaulting to internet search engines such as Google rather than thinking it through.

Just as physical muscles atrophy without use, so too do our cognitive abilities weaken when we let technology think for us.

A telling case is now called the “Google effect,” or digital amnesia, as shown in a 2011 study from Columbia University.

Betsy Sparrow and colleagues at Columbia found that individuals tend to easily forget information that is readily available on the Internet.

Their findings showed that people are more likely to remember things they think are not available online. They are also better at recalling where to find information on the Internet than recalling the information itself.

A 2021 study further tested the effects of Googling and found that participants who relied on search engines such as Google performed worse on learning assessments and memory recall than those who did not search online.

The study also showed that Googlers often had higher confidence that they had “mastered” the study material, indicating an overestimation in learning and ignorance of their learning deficit. Their overconfidence might be the result of having an “illusion of knowledge” bias—accessing information through search engines creates a false sense of personal expertise and diminishes people’s effort to learn.

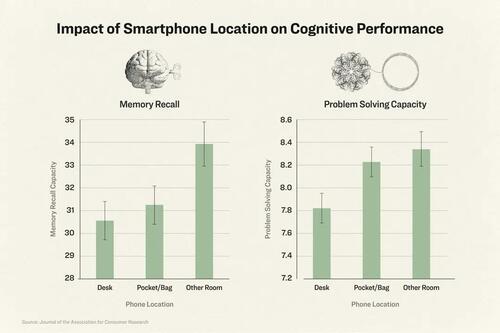

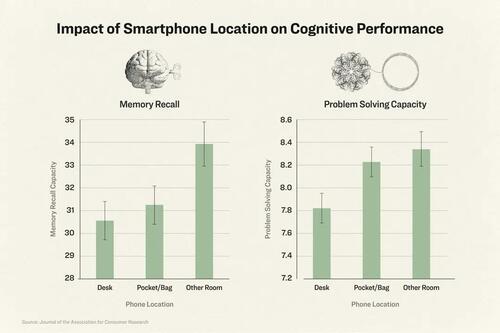

Overreliance on technology is part of the problem, but having it around may be just as harmful. A study published in the Journal of the Association for Consumer Research discovered that “the mere presence” of a smartphone reduced “available cognitive capacity”—even if the phone was off or placed in a bag.

This “brain drain” effect likely occurs because the presence of a smartphone taps into our cognitive resources, subtly allocating our attention and making it harder to concentrate fully on the task at hand, researchers say. Excessive tech use does not only impair our cognition; clinicians and researchers have also linked it to impaired social intelligence, the innate aspects that make us human.

Becoming Machine-Like

In the United States, children aged 8 to 12 typically spend 4 to 6 hours a day on screens, while teenagers may spend up to 9 hours. Further, 44 percent of teenagers feel anxious, and 39 percent feel lonely without their phones.

Excessive screen time reduces social interactions and emotional intelligence and has been linked to autistic-like symptoms, with longer durations of screen use correlated with more severe symptoms.

Dr. Jason Liu, a medical doctor who also has a doctorate in neuroscience, is a research scientist and founding president of the Mind-Body Science Institute International. Liu told The Epoch Times he is particularly concerned about children’s use of digital media.

He said he has observed irregularities in his young patients who spend excessive time in the digital world—noticing their mechanical speech, lack of emotional expression, poor eye contact, and difficulty forming genuine human connections. Many exhibit ADHD symptoms, responding with detachment and struggling with emotional fragility.

“We should not let technology replace our human nature,” said Liu.

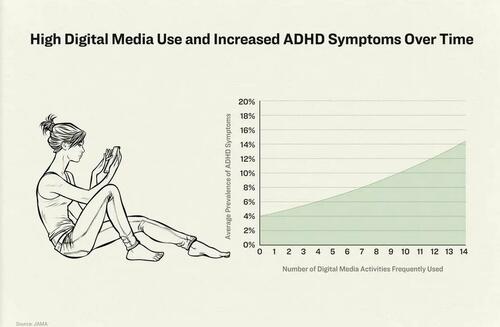

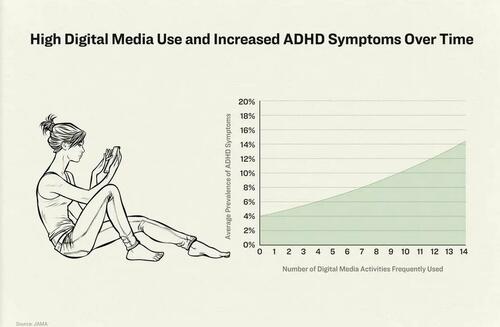

Corroborating Liu’s observations, a JAMA study followed about 3,000 adolescents with no prior ADHD symptoms over 24 months and found that a higher frequency of modern digital media use was associated with significantly higher odds of developing ADHD symptoms.

As early as 1998, scientists introduced the concept of the “Internet Paradox,” a phenomenon in which the Internet, despite being a “social tool,” leads to antisocial behavior.

Observing 73 households during their first years online, researchers noted that increased Internet use was associated with reduced communication with family members, smaller social circles, and heightened depression and loneliness.

However, a three-year follow-up found that most of the adverse effects dissipated. The researchers explained this through a “rich get richer” model, where introverts experienced more negative effects from the Internet, while extroverts, with stronger social networks, benefited more and became more engaged in online communities, mitigating negative impacts.

Manuel Garcia-Garcia, global lead of neuroscience at Ipsos, who holds a doctorate in neuroscience, told The Epoch Times that human-to-human connections are vital for building deeper connections. While digital communication tools facilitate connectivity, they can lead to superficial interactions and impede social cues, he said.

Supporting Liu’s observation of patients becoming “machine-like,” a Facebook emotional contagion experiment, conducted on nearly 700,000 users, manipulated news feeds to show more positive or negative posts. Users exposed to more positive content posted more positive updates, while those seeing more negative content posted more negative updates.

This demonstrated that technology can nudge human behavior in subtle yet systematic ways. This nudging, according to experts, can make our actions and emotions predictable, similar to programmed responses.

The Eureka Moment

“Sitting on your shoulders is the most complicated object in the known universe,” stated theoretical physicist Michio Kaku.

While the most advanced technologies, including AI, may appear sophisticated, they are incommensurate with the human mind.

“AI is very smart, but not really,” Kathy Hirsh-Pasek, professor of psychology at Temple University and senior fellow at the Brookings Institution, told The Epoch Times. “It’s a machine algorithm that’s really good at predicting the next word. Full stop.”

The human brain is constructed developmentally, and it’s “not just given to us like a computer is in a box,” Hirsh-Pasek said. Our environment and experiences shape the intricate web of neural connections, 100 billion neurons interconnected by 100 trillion synapses.

Human learning thrives on meaning, emotion, and social interaction. Hirsh-Pasek notes that computer systems like AI are indifferent to these elements. Machines only “learn” with the data they are fed, optimizing for the best possible output.

A cornerstone of human intelligence is the ability to learn through our senses, said Jessica Russo, a clinical psychologist, in an interview with The Epoch Times. When we interact with our environment, we process a large amount of data from what we see, hear, taste, and touch.

AI systems cannot go beyond the information they have been given, and they, therefore, cannot truly produce anything new, Hirsh-Pasek said.

“[AI] is an exquisitely good synthesizer. It’s not an exquisitely good thinker,” she said.

Humans, however, can.

Read the rest here...
